Last Tuesday it was finally time to move into my new colocation rack. I spent the last couple of days finishing the configuration because I had not been able to prepare everything beforehand. Now that it is done, I can finally write this post.

Planning

On Tuesday I started work in the morning at our office in Utrecht and planned to drive to the datacenter after my lunch break. My colleague had never been to a datacenter and asked if he could come with me. Once there, we would rack the hardware and connect the network and power cables. When everything was attached I could start configuring the final things, like the site-to-site VPN and iDRAC.

Because I am renting this space through a different company, they have to be present whenever I work on the servers here. For this reason I want to spend as little time in the datacenter as possible: get everything ready so I can access the hardware from home over the VPN.

My colleague had picked up my other server the day before from the rack my boss was renting. I needed this server now because I am planning on using the 16 600 GB SAS drives that are in it. We would spend the morning moving the drives over to their new Dell caddies. The week before, he had already attached a 5 TB external USB hard drive to copy the VMs to; we would attach that one to the new servers as well.

First challenge

While we were in the datacenter racking the servers and network hardware, we found that the rails were not compatible with the R620 server. I bought the rails together with the servers and never thought about checking whether they were the correct ones. After some discussion we decided to just rip off the pins that were in the way. Below you can see one of the pins still attached to the rail, the others we broke off :).

After breaking the pins off, the servers fit nicely and we could continue our work.

Second challenge

We had everything mounted quite fast after fixing the rack rails, but I could not get the network and internet working. I was connected to the switch with my console cable while standing in the 'hot aisle' (not a good plan in hindsight :P), but could not reach the switch over the network. After spending a good 2 hours debugging and resetting the switch I found the problem. I am using a Dell PowerConnect 5524 switch that I had lying around, but it had been part of a stack some time ago. When I prepared the configuration before we went to the datacenter, I noticed that the switch said it was number 2 in the stack. I just ignored it, thinking it was some weird bug left over from its time in a stack. But even after I reset the config multiple times it still thought it was switch 2, while I had configured everything as if it was switch 1. After finding this 'mistake' I quickly redid the config for switch 2 and got internet working.
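For anyone who runs into the same unit-number mismatch, here is a rough Python sketch of the kind of rewrite involved: taking a prepared config and pointing every port reference at the unit the switch actually reports. It assumes ports are written in the short `gi<unit>/<slot>/<port>` and `te<unit>/<slot>/<port>` form; the function name and the sample config are made up for illustration, so check the naming in your own config before relying on it.

```python
import re

# Assumption: ports look like gi1/0/5 or te1/0/1, i.e. <type><unit>/<slot>/<port>.
_PORT = re.compile(r"\b(gi|te)(\d+)/(\d+)/(\d+)\b")


def renumber_unit(config: str, old_unit: int, new_unit: int) -> str:
    """Rewrite every port reference on old_unit so it points at new_unit."""

    def swap(match: re.Match) -> str:
        kind, unit, slot, port = match.groups()
        if int(unit) != old_unit:
            # Ports on other stack units are left untouched.
            return match.group(0)
        return f"{kind}{new_unit}/{slot}/{port}"

    return _PORT.sub(swap, config)


if __name__ == "__main__":
    # Hypothetical config fragment, not the real one from the rack.
    sample = (
        "interface gi1/0/1\n"
        " description uplink\n"
        "interface te1/0/1\n"
        " description server\n"
    )
    print(renumber_unit(sample, old_unit=1, new_unit=2))
```

Rewriting the config is only one way out; renumbering the switch itself back to unit 1 would be the other, but that depends on the firmware and is not covered here.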

Result

It all took way longer than expected, but after around 4 hours of work we were done for the day. I could access the firewall, switch and iDRAC over my VPN, so I could finish the config from home.
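Before leaving the datacenter it is worth confirming that every management interface really answers over the VPN. A minimal sketch of such a check in Python is below. The addresses are placeholders from the documentation range and the ports are assumptions (HTTPS for the firewall and iDRAC web interfaces, SSH for the switch), so swap in whatever your own setup uses.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False


# Hypothetical management addresses and ports, not the real ones.
TARGETS = {
    "firewall": ("192.0.2.1", 443),
    "switch": ("192.0.2.2", 22),
    "idrac-1": ("192.0.2.11", 443),
    "idrac-2": ("192.0.2.12", 443),
}


def check_all(targets, timeout=3.0):
    """Map each target name to True/False depending on reachability."""
    return {
        name: is_reachable(host, port, timeout)
        for name, (host, port) in targets.items()
    }


if __name__ == "__main__":
    for name, ok in check_all(TARGETS).items():
        print(f"{name}: {'reachable' if ok else 'NOT reachable'}")
```

A successful TCP connect only shows that the port is open through the tunnel, not that the login works, but it catches routing and firewall mistakes while someone is still standing next to the rack.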

The first thing on the list was getting my vCenter server running again and setting up vSAN. So I added the 2 servers to vCenter and to the already existing distributed switch to finish the network config. Then I created the cluster and moved the first server into it, then vMotioned the vCenter server to that host so I could add the second server to the cluster. I had already prepared a vSAN network with routing to another site to be used for the witness node, so I installed the witness node OVF to enable vSAN. Setting up vSAN was a lot easier than I first thought, and I was quickly migrating my VMs off the USB storage onto the new vSAN datastore.

The move took quite long, but after it was done I booted up the VMs, changed some DNS records and everything was working again. Because I used the same distributed switch I did not have to change any networks for the VMs. The cluster itself is now finished and fully working. Next week I will change my network scheme and tidy the whole homelab up. I will try to post as much of this as possible, but I will also be starting my new job, so I might not have that much time (or motivation) left to write these posts :).

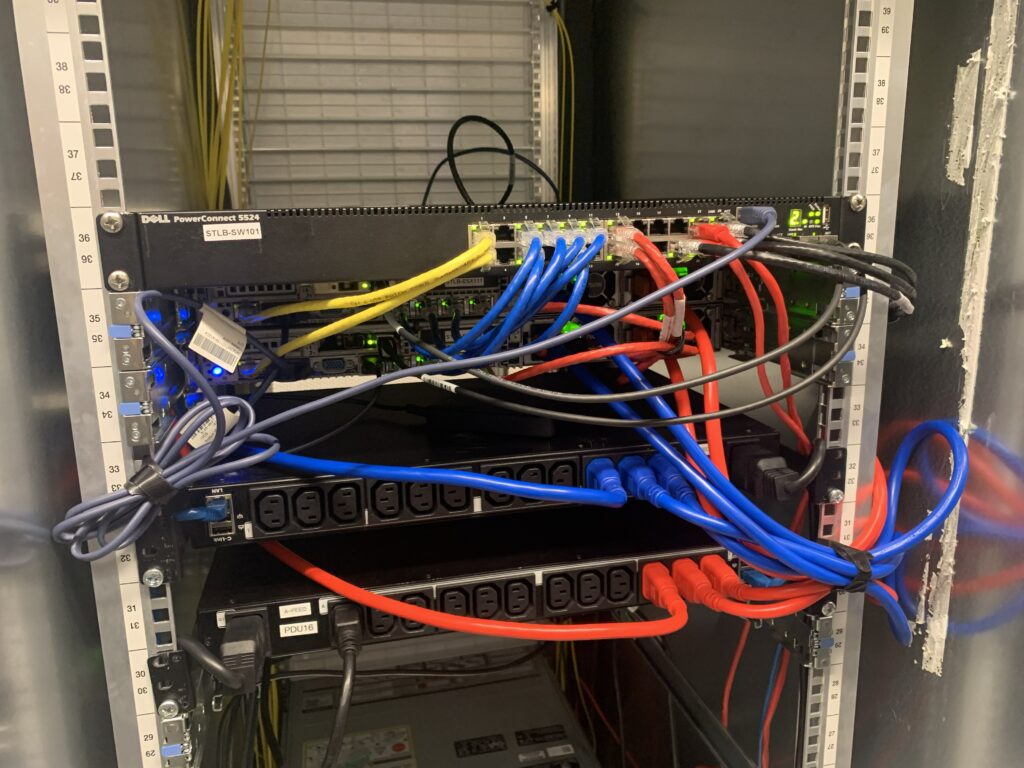

Below are some pictures of my new datacenter location:

I will come back soon to tidy up the cables some more.

Completed setup:

Hardware

2x Dell PowerEdge R620

- 2x Intel Xeon E5-2640 v1

- 240 GB DDR3 ECC registered

- 8x 600 GB 15K SAS

- 1x 500 GB NVMe SSD

- Dell H310 Mini Mono flashed to IT mode (one has an H710 flashed to IT mode because one of the H310s died)

- Broadcom dual-port 10GbE NIC

- Broadcom quad-port 1GbE NIC

Dell PowerConnect 5524

- 2-port LACP uplink to the internet

- Servers connected with 3-port LACP

Cisco ASA 5506-X

- 1 uplink port

- 2-port LACP trunk

Plans

Some plans I have for the cluster:

- Dell OptiPlex 3040+ as an extra ESXi host for backups and vSAN witness

- Nvidia Tesla M4/P4/T4 for GPU acceleration

- New E5-2697 v2 CPUs (already have one pair)

- 16 GB of extra memory for each node to bring the total up to 512 GB

- Swap both RAID controllers for H710P in IT mode (already have both controllers)
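
As a quick sanity check on the memory plan, the numbers from the hardware list add up as follows. This is just the arithmetic written out in Python; the variable and function names are made up for the example.

```python
NODES = 2
CURRENT_GB_PER_NODE = 240  # from the hardware list above
EXTRA_GB_PER_NODE = 16     # planned addition per node


def cluster_memory_gb(nodes: int, per_node_gb: int) -> int:
    """Total cluster memory when every node has the same amount."""
    return nodes * per_node_gb


now = cluster_memory_gb(NODES, CURRENT_GB_PER_NODE)
planned = cluster_memory_gb(NODES, CURRENT_GB_PER_NODE + EXTRA_GB_PER_NODE)
print(now, planned)  # prints: 480 512
```

So the cluster sits at 480 GB today, and 16 GB more per node gives 256 GB per node and 512 GB in total.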